Painless Status Reporting in GitHub Pull Requests - Designing CI/CD Systems

Continuing the build service discussion from the Designing CI/CD Systems series, we’re now at a good point to look at reporting status as code passes through the system.

At the very minimum, we want to communicate build results to our users, but it’s worth examining other steps in the process that also provide useful information.

The code for reporting status isn’t a major feat. However, using it to enforce build workflows can get complicated when implemented from scratch.
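As a rough illustration of how small the reporting code can be, here is a minimal sketch that builds a request for GitHub's commit status endpoint (`POST /repos/{owner}/{repo}/statuses/{sha}`). The function name, the `ci/build` context label, and the choice of `urllib` are assumptions made for this example, not the article's implementation. The request is only constructed, not sent.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"
# States accepted by GitHub's commit status endpoint.
VALID_STATES = {"error", "failure", "pending", "success"}


def build_status_request(owner, repo, sha, state, token,
                         description="", context="ci/build", target_url=None):
    """Build (but do not send) a commit status request.

    `context` is a hypothetical label identifying this build system.
    """
    if state not in VALID_STATES:
        raise ValueError(f"invalid state: {state!r}")

    payload = {"state": state, "description": description, "context": context}
    if target_url:
        # Link back to wherever the build logs live.
        payload["target_url"] = target_url

    return urllib.request.Request(
        f"{GITHUB_API}/repos/{owner}/{repo}/statuses/{sha}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. A pipeline would typically report `pending` when the build starts and `success` or `failure` when it ends.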

Continue reading

9 Organizational Test Practices Guaranteed to Lower Quality and Customer Satisfaction

A contribution to freeCodeCamp.org examining test practices opposite to our usual goals.

Command Execution Tricks with Subprocess - Designing CI/CD Systems

The most crucial step in any continuous integration process is the one that executes build instructions and tests their output. There’s an infinite number of ways to implement this step ranging from a simple shell script to a complex task system.

Keeping with the principles of simplicity and practicality, today we’ll look at continuing the series on Designing CI/CD Systems with our implementation of the execution script.
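To make the idea of an execution step concrete, here is a minimal sketch using the standard `subprocess` module. The helper names `run_step` and `run_build`, the result dictionary, and the timeout value are assumptions for illustration, not the script from the series.

```python
import subprocess
import sys


def run_step(command, cwd=None, timeout=600):
    """Run one build command and capture its exit code and output."""
    try:
        proc = subprocess.run(
            command,
            cwd=cwd,
            capture_output=True,  # collect stdout and stderr
            text=True,            # decode output to str
            timeout=timeout,
            check=False,          # inspect the return code ourselves
        )
    except subprocess.TimeoutExpired:
        return {"command": command, "returncode": None,
                "output": "", "timed_out": True}
    return {"command": command, "returncode": proc.returncode,
            "output": proc.stdout + proc.stderr, "timed_out": False}


def run_build(commands):
    """Run commands in order, stopping at the first failure."""
    results = []
    for command in commands:
        result = run_step(command)
        results.append(result)
        if result["returncode"] != 0:
            break
    return results


if __name__ == "__main__":
    steps = [
        [sys.executable, "-c", "print('compiling')"],
        [sys.executable, "-c", "import sys; sys.exit(3)"],
        [sys.executable, "-c", "print('never reached')"],
    ]
    for result in run_build(steps):
        print(result["returncode"], result["output"].strip())
```

Passing each command as a list of arguments avoids invoking a shell, which sidesteps quoting problems when commands come from user-supplied build instructions.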

Continue reading

Conveying Build and Test Information with Repository Badges

When you check out a repository on GitHub, sometimes there’s a little bit of flair at the top of the project that catches your eye. This bit of flair is called a badge and can be used to indicate build status, test coverage, documentation generation status, version support, software compatibility statements or even community links to Gitter or Discord where you can find more help with the project. I used to think that badges were fancy fluff people added to their projects to make them seem more professional.

Continue reading

Easy Clustering with Docker Swarm - Designing CI/CD Systems

Building code is sometimes as simple as executing a script. But a full-featured build system requires a lot more supporting infrastructure to handle multiple build requests at the same time, manage compute resources, distribute artifacts, etc. After our last chapter discussing build events, this next iteration in the CI/CD design series covers how to spin up a container inside Docker Swarm to run a build and test it.

What is Docker Swarm

When running the Docker engine in Swarm mode you’re effectively creating a cluster.

Continue reading

Awesome Webhook Handling with Sanic - Designing CI/CD Systems

After covering how to design a build pipeline and define build directives in the continuous builds series, it’s time to look at handling events from a code repository.

As internet standards evolved over the years, the HTTP protocol has become more prevalent. It’s easier to route, simpler to implement and even more reliable. This ubiquity makes it easier for applications that traverse or live on the public internet to communicate with each other. As a result of this, the idea of webhooks came to be as an “event-over-http” mechanism.

With GitHub as the repository management platform, we have the advantage of using their webhook system to communicate user actions over the internet and into our build pipeline.
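One step any webhook receiver needs is confirming that a delivery really came from GitHub. When a secret is configured, GitHub signs each payload with HMAC-SHA256 and sends the result in the `X-Hub-Signature-256` header as `sha256=<hex digest>`. The sketch below checks that signature using only the standard library. The function name is an assumption, and wiring it into a Sanic handler is left out.

```python
import hashlib
import hmac


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Return True if the header matches the HMAC-SHA256 of the raw body."""
    if not signature_header or not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison to avoid leaking information through timing.
    return hmac.compare_digest(expected.encode("utf-8"),
                               signature_header.encode("utf-8"))
```

The check must run against the raw request bytes, before any JSON parsing, because re-serializing the payload can change its byte representation and invalidate the signature.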

Continue reading

4 Attempts at Packaging Python as an Executable

A few years back I researched how to create a single-file executable of a Python application. Back then, the goal was to make a desktop interface that included other files and binaries in one bundle. Using PyInstaller I built a single binary file that could execute across platforms and looked just like any other application.

Fast forward to today and I have a similar need, but a different use case. I want to run Python code inside a Docker container, but the container image cannot require a Python installation.

Instead of blindly repeating what I tried last time, I decided to investigate more alternatives and discuss them here.

Continue reading

Effortless Parsing of Build Specifications - Designing CI/CD Systems

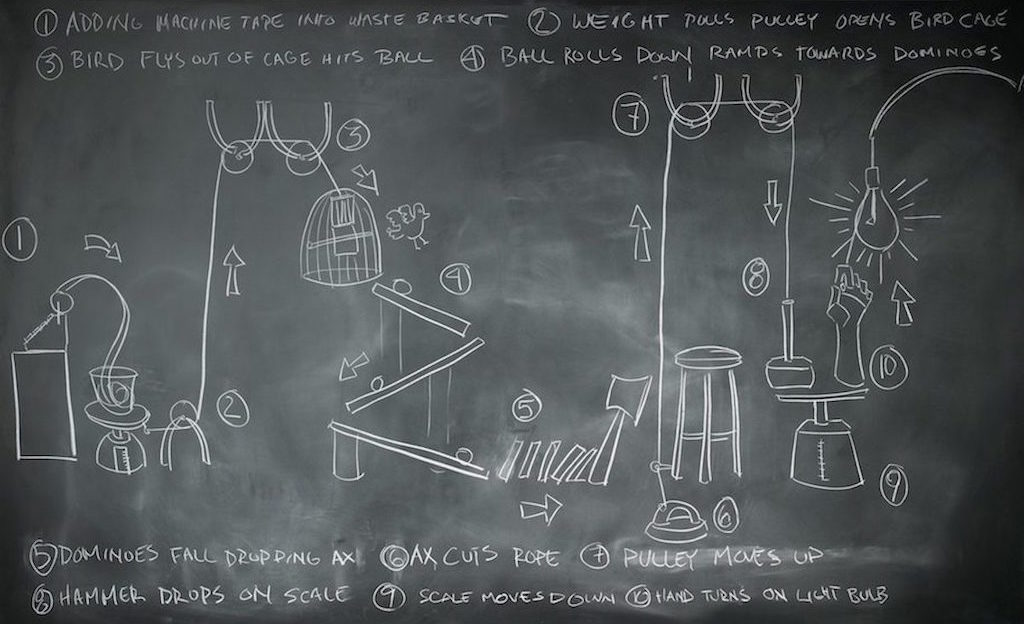

Every code repository is different. The execution environment, the framework, the deliverables, or even the linters, all need some sort of customization. Creating a flexible build system requires a mechanism that specifies the steps to follow at different stages of a pipeline.

As the next chapter in the Comprehensive CI/CD Pipeline and System Design series, this article examines which instructions you’ll want to convey to your custom system and how to parse them. The focus is around a common solution, adding a file into the repository’s root directory that’s read by your execution engine when receiving new webhooks.
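As a sketch of the "file in the repository root" approach, the example below loads and validates a build specification. Everything specific here is hypothetical: the `build.json` filename, the JSON format, and the `install` / `test` / `package` stage names are assumptions chosen so the example runs with the standard library alone. The article may use a different format and schema.

```python
import json
import tempfile
from pathlib import Path

SPEC_FILENAME = "build.json"             # hypothetical file name
STAGES = ("install", "test", "package")  # hypothetical pipeline stages


def load_build_spec(repo_root):
    """Read the spec from the repository root and return stage -> commands."""
    spec_path = Path(repo_root) / SPEC_FILENAME
    with spec_path.open(encoding="utf-8") as handle:
        raw = json.load(handle)

    if not isinstance(raw, dict):
        raise ValueError(f"{SPEC_FILENAME} must contain a JSON object")
    unknown = set(raw) - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stages: {sorted(unknown)}")

    spec = {}
    for stage in STAGES:
        commands = raw.get(stage, [])  # missing stages default to no commands
        if not isinstance(commands, list) or not all(
            isinstance(command, str) for command in commands
        ):
            raise ValueError(f"stage {stage!r} must be a list of command strings")
        spec[stage] = commands
    return spec


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as repo_root:
        example = {"install": ["pip install -r requirements.txt"],
                   "test": ["pytest"]}
        (Path(repo_root) / SPEC_FILENAME).write_text(
            json.dumps(example), encoding="utf-8")
        print(load_build_spec(repo_root))
```

Rejecting unknown stages up front means a typo in the spec fails loudly instead of silently skipping a step.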

Continue reading

Best Tips for Running Enterprise CI/CD in the Cloud

There are many solutions for building code: some are available as cloud services, others run on your own infrastructure, on private clouds, or all of the above. They make it easy to create custom pipelines, as well as simple testing and packaging solutions. Some even offer open source feature-limited “community editions” to download and run on-premises for free.

Following is my experience on the important aspects to consider when deciding on a build system for your organization that depends on cloud services. The original intent was to include a detailed review of various online solutions, but I decided to leave that for another time. Instead, I’m speaking more from an enterprise viewpoint, which is closer to reality in a large organization than the usual how-to’s. We’re here to discuss the implications and the practicality of doing so.

Continue reading

Comprehensive CI/CD System Design

Continuous integration and delivery is finally becoming a common goal for teams of all sizes. After building a couple of these systems at small and medium scales, I wanted to write down ideas, design choices and lessons learned. This post is the first in a series that explores the design of a custom build system created around common development workflows, using off-the-shelf components where possible. You’ll get an understanding of the basic components, how they interact, and maybe an open source project with example code from which to start your own.

Continue reading

Integrating Pytest Results with GitHub

When joining a new engineering team, one of the first things I do is familiarize myself with the dev and test processes. Especially the tools used to enforce them. In the past 5 years or so, I’ve noticed that a lot of organizations still use older tools that haven’t yet evolved to support modern practices. Even teams that purely develop software can find themselves working around cumbersome systems that hinder instead of enable.

What do I mean by that? Very few of these tools include useful interfaces to leverage integrations with other systems (like REST APIs). Most have no concept of modern dev practices like continuous integration or containerization. Almost all of them want to record pass / fail on a step-by-step basis as if you’re executing manually. The vast majority are built around a separation between test and dev (some even emphasize it). And a lot of them require the organization to hire “specialists” for the purpose of “customizing” the tool to the team. In my opinion, these types of systems coerce the organization to emphasize blame over quality and team boundaries over productivity.

I’ve been very successful at building long-lived alternatives to these systems in several organizations. I’ve done it enough to know which features are worth including, and which to leave to the test / dev engineers, especially after the advent of continuous integration and delivery.

Continue reading

Practicality Beats Purity - Pure SQL vs ORMs

I’ve been using some form of a database throughout the entirety of my career. Sometimes single-file databases, sometimes full servers. Sometimes testing them, sometimes designing them, but a lot of times I was optimizing them. Even when learning programming with my dad, most of the apps I built were about storing and managing some type of data.

With all those years of experience, I definitely understand enough to know that I’m by no means an expert at any of it. There are several (an understatement) mechanisms by which folks make the best use of their database servers, almost all of them are tradeoffs in memory usage, space, look-up times, results retrieval, backup mechanisms, etc.

As expected, each database mechanism has its own quirks and optimizations, but the common theme is the language you use to retrieve information: Structured Query Language (SQL). Different database engines implement different extensions to this language, some of which add powerful functionality, some of which just add confusion. But in general, SQL has been very successful in standardization across the industry.

This next chapter in the Practicality Beats Purity series covers the tradeoffs when using direct SQL queries to a database vs programming language abstractions that do it for you, like ORMs.

Continue reading

PyBites: How Promotions work in Large Corporations

A collaboration with Bob and Julian from PyBites on soft skills.

Asyncio in Python 3.7

The release of Python 3.7 introduced a number of changes into the async world. There are a lot of quality-of-life improvements, some affect compatibility across versions and some are new features to help manage concurrency better. This article will go over the changes, their implications and the things you’ll want to watch out for if you’re a maintainer. Some may affect you even if you don’t use asyncio.

Continue reading

Python Bytes Episode 83: from __future__ import braces

Brian Okken and Michael Kennedy had me on as a special guest this week at Python Bytes. We discussed some recent Python headlines: Code with Mu, Python parenthesis primer, Python for Qt Released, Itertools in Python 3, Python Sets and Set Theory, Python 3.7 coming soon!

TalkPython Episode 166 - Continuous Delivery with Python

Michael Kennedy from the TalkPython podcast was kind enough to have me on the show to discuss continuous delivery and continuous integration with Python. We go over the different workflows, tooling and Python modules that can help get things going.

General Data Protection Regulation

Ying Li’s PyCon 2018 keynote discussed the importance of writing secure software, and the responsibility that we, as developers, have in keeping users safe. While watching, it occurred to me that I haven’t written about the new European Union laws that stem from the General Data Protection Regulation (GDPR) that goes into effect this month.

I was involved in several conversations on this topic lately, so here’s some information on the rules, their implications and responses from businesses, and the reactions as the rest of the world tries to implement similar systems.

Continue reading

Practicality Beats Purity - Microservices vs Monoliths

In recent years there’s been a growing trend to move away from large all-in-one applications. These “monoliths”, developed with one codebase and delivered as one large system, are hard to maintain. In their place, the industry now favors splitting off the component systems into individual services. As separate “microservices”, they perform the smallest functions possible grouped into logical units. They are independent deliverables, deployable, replaceable and upgradeable on their own.

Going further into the Practicality Beats Purity series, this article will cover the implications of transitioning to a microservices architecture.

Continue reading